Credit Card Primer

This primer explains:

- Why you should have a credit card

- What a credit score is and why it's important

- How you make payments on a credit card

- How interest works on a credit card

- What to look for in a card

The short reason you need a credit card is that it protects you against emergency, fraudulent, and systemic risks that can result in you spending more money than you need to. You do not need to pay extra money to have a credit card and you do not pay interest on the things you buy with a credit card unless you fail to pay the card back on time. The perks and protection a credit card offers have the potential to save you money if you use the card correctly.

Why you should have a credit card

Capacitance

A credit card offers immediate, no-questions-asked access to significant amounts of money. This is handy in the case of emergencies where you need to purchase a thing now and can either sort out how to pay for it later or are okay with spreading payments out over a longer time frame (as detailed below).

Some potential scenarios:

You require stabilizing medication of some sort. Some conditions, such as epilepsy and depression, can give rise to serious issues if medication is suddenly interrupted. Birth control pills are similar: the consequences of losing access can be high.

You're travelling and have unexpected costs. Perhaps Eyjafjallajökull erupts and you can't fly home for several days and need to purchase accommodations. Though you'd want to check sources like Couchsurfing and friends before spending money, having something to fall back on is a good idea.

Debit cards will allow you to withdraw more money than you have, but can charge an overdraft fee of $30+ per transaction if you do so.

It increases your credit score

Credit scores track your ability to repay money you borrow. The higher your credit score is, the better. (More details below.) Having one or more credit cards for a long period of time and dutifully repaying them on time demonstrates that you can be trusted to repay the money you borrow. This means that lenders will charge you lower interest rates because lending to you is less risky for them. These lower interest rates can save you lots of money.

Renting An Apartment. A 2014 TransUnion Survey found that 48% of landlords said the results of a credit check are among the top three factors used when deciding whether or not to accept a tenant's lease application. In tight rental markets (like San Francisco), including a print-out showing a good credit score in your initial contact with a landlord can make it easier to snag a place.

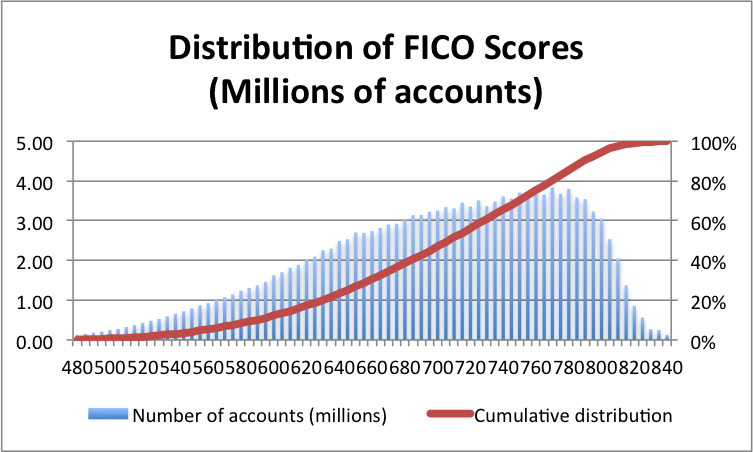

Buying A House. For a 30-year fixed loan, a credit score of 620 will likely receive an APR of about 6%, according to myFICO. A credit score of 760 or above would receive an APR of about 4.39%. On a $100,000 loan, a credit score of 620 would incur $79,700 in interest over a 30-year period. A credit score of 760 would incur $47,616 ($32,084 in savings).
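To see how the interest rate drives the total cost of a loan, here is a minimal sketch using the standard fixed-rate amortization formula. It is an illustration, not myFICO's calculator: the function names are hypothetical, and it assumes monthly compounding, level payments, and no fees, so its totals may differ from the figures quoted above.

```python
def monthly_payment(principal: float, annual_rate_pct: float, years: int) -> float:
    """Fixed monthly payment on a fully amortizing loan (hypothetical helper)."""
    monthly_rate = annual_rate_pct / 100 / 12
    n_payments = years * 12
    if monthly_rate == 0:
        # With no interest, the principal is simply split evenly.
        return principal / n_payments
    return principal * monthly_rate / (1 - (1 + monthly_rate) ** -n_payments)


def total_interest(principal: float, annual_rate_pct: float, years: int) -> float:
    """Total interest paid over the life of the loan."""
    return monthly_payment(principal, annual_rate_pct, years) * years * 12 - principal


if __name__ == "__main__":
    # Compare the two rates mentioned in the text on a $100,000, 30-year loan.
    for rate in (6.0, 4.39):
        print(f"{rate}% APR: ${total_interest(100_000, rate, 30):,.0f} in interest")
```

Whatever the exact assumptions, the direction is the same: a lower rate means substantially less interest over 30 years.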

Car Insurance. Folks with no credit pay 67% more for car insurance than folks with excellent credit (link).

Home Insurance. Folks with a poor credit score pay 150% more for home insurance than folks with an excellent credit score (link).

It provides you better fraud protection

Credit cards offer better fraud protection than debit cards. With a credit card, your maximum liability for fraudulent charges is $50 if the charges are reported within 60 days (and many credit cards don't even charge this). With a debit card, your liability is limited to $50 only if the charges are reported within 48 hours; after that, it rises to $500. After 60 days, there is no limit.
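The liability tiers described above can be written out as a small lookup. This is only a sketch of the rules as stated in this primer, not legal advice; the function name `max_fraud_liability` is hypothetical, and the credit card case after 60 days returns `None` because the text does not specify it.

```python
import math
from typing import Optional


def max_fraud_liability(card_type: str, days_until_reported: float) -> Optional[float]:
    """Maximum out-of-pocket loss for fraudulent charges, per the tiers in the text."""
    if card_type == "credit":
        # $50 cap if reported within 60 days; later reporting is not covered here.
        return 50.0 if days_until_reported <= 60 else None
    if card_type == "debit":
        if days_until_reported <= 2:  # within 48 hours
            return 50.0
        if days_until_reported <= 60:
            return 500.0
        return math.inf  # no limit after 60 days
    raise ValueError(f"unknown card type: {card_type!r}")


if __name__ == "__main__":
    for days in (1, 30, 90):
        print(days, "days:", max_fraud_liability("debit", days))
```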

Many other benefits

Rental Car Insurance. American Express, alone among the cards, offers primary rental car insurance if you pay for the rental with your AmEx card. This costs ~$20 for the whole rental, which is cheaper than the $20/day that most car rental agencies will charge. (Link)

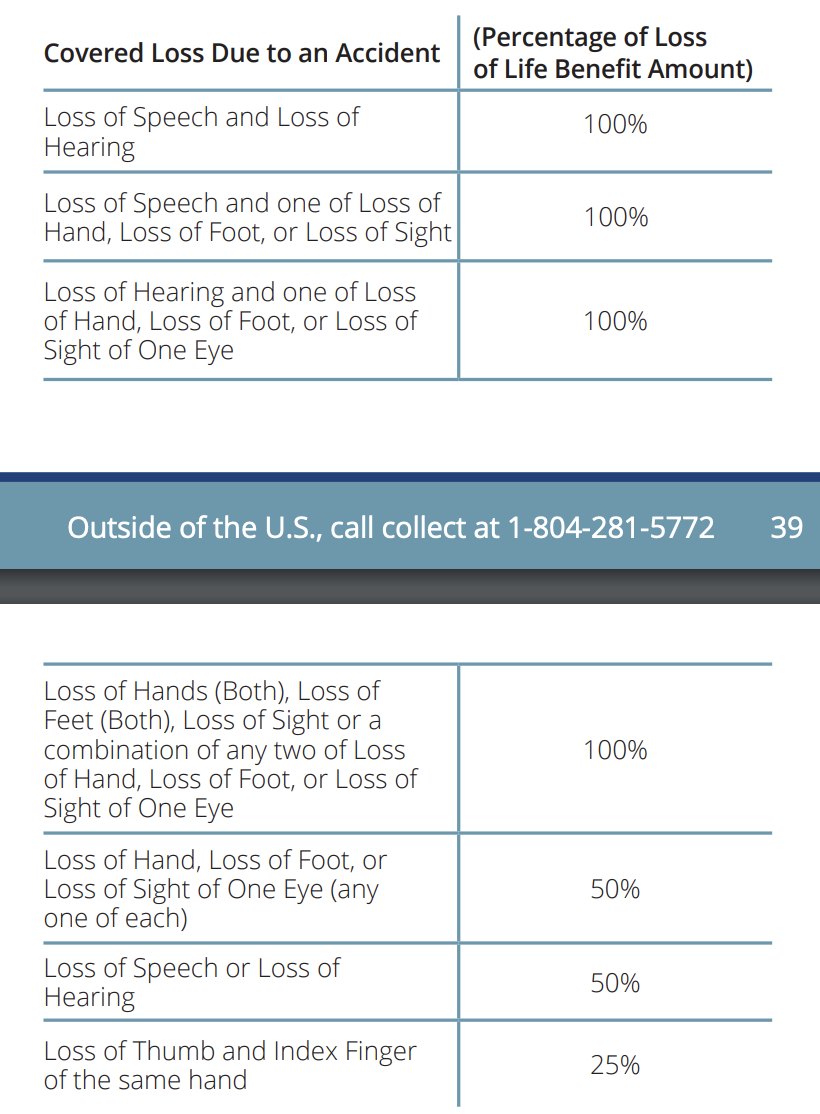

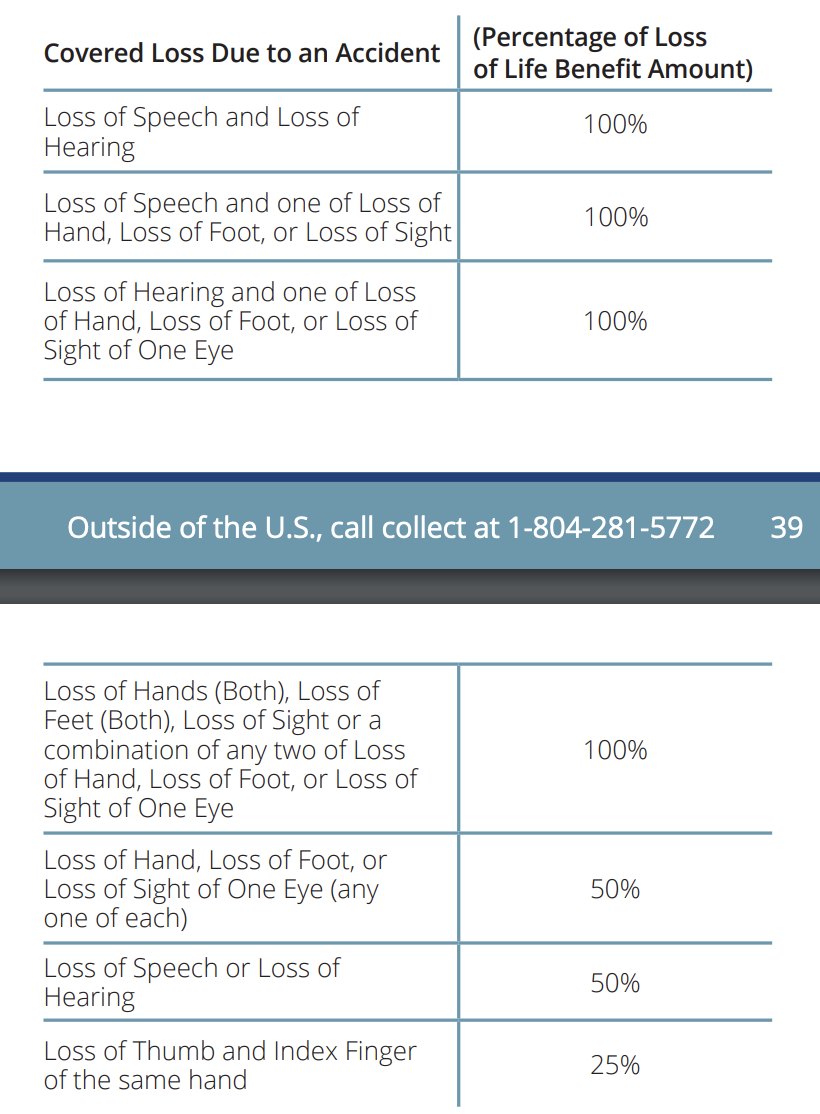

Loss of Limb. As a perk, many credit cards offer payouts if you get injured while traveling, provided you booked your trip with the credit card. For instance, Chase Sapphire Reserve will pay a percentage of $1M if you're injured while in transit and $100k if you're injured while on your trip, with the percentages determined by the table below:

The same card covers emergency medical evacuations ($100k), a flight for a friend or family member to visit if you're hospitalized for an extended period while traveling, a new plane ticket if you can't make your original return flight, and even money to fly your body home if you die, at which point the $100-500k life insurance would kick in.

Perks. Many credit cards offer "points" if you spend money on qualifying purchases. It is rarely a good idea to think about these too much. Rather, find a credit card that offers points for things you buy regularly (I use airmiles cards) and forget about them. Alternatively, get a card that offers some % cash back.

Misc

- A credit card is often required to rent a car or to get a hotel room. While this can sometimes be done with a debit card, the renting agency may place a hold on $250+ of your funds for the duration of your use and for a number of days afterwards. In the case of a debit card, this can hamper your ability to pay for things. In the case of a credit card you usually don't have to worry about it.

What's a credit score?

The above makes it clear that credit scores can have a significant financial impact. Credit scores track your ability to repay money you borrow. The higher your credit score is, the better.

A credit score is determined by combining multiple factors together into a single number that ranges from 300 to 850.

When you first get access to credit, you're likely to start with a score near 300. This is bad because it exposes you to higher interest rates and can make it more difficult to acquire housing. Therefore, building a good credit score as soon as possible is a good idea.

Credit scores are calculated by combining several factors:

- Payment history (35% weight). Late payments weigh heavily against you.

- Total amount owed (30% weight). Large outstanding balances weigh against you. This is known as credit utilization.

- Length of credit history (15% weight). If you've only had a card for a month, this is not as strong a signal about your ability to borrow wisely as if you've had a card for a long time.

- Types of credit (10% weight). A mixture of credit types can be a positive.

- New/recent credit (10% weight). Applying for credit or opening new credit cards can result in a temporary dip in credit scores as it may indicate trying to acquire money you don't otherwise have and it's difficult to distinguish doing this responsibly versus desperately.

Student loans count towards all of this. If you don't have student loans, then having a credit card is more important because you are not otherwise establishing a credit history.

You can check your credit score at Credit Karma, Annual Free Credit Report, and Mint.

How do you improve your credit score?

Given the above, you improve your credit score by:

- Paying your bills on time. Several months of on-time payments are required to see a noticeable difference in your score.

- Decreasing your credit utilization: the ratio of how much you've borrowed to how much you can borrow (your credit limit). You do this either by increasing your credit limit (call your credit card company or poke at their website) or, and this is often easier, by getting an additional credit card.

- Having more credit accounts. Getting an additional credit card is often an easy way to do this. Student loans also count.
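Credit utilization is just a ratio, so the effect of a higher limit or an extra card is easy to check. The sketch below is illustrative only: the `utilization` function and the dollar amounts are made up for the example, and it assumes utilization is measured across all cards combined.

```python
from typing import Sequence


def utilization(balances: Sequence[float], limits: Sequence[float]) -> float:
    """Total borrowed divided by total credit limit across all cards."""
    total_limit = sum(limits)
    if total_limit <= 0:
        raise ValueError("total credit limit must be positive")
    return sum(balances) / total_limit


if __name__ == "__main__":
    # One card: $500 owed on a $1,000 limit.
    print(f"one card:  {utilization([500], [1000]):.0%}")
    # Add a second, unused card with another $1,000 limit.
    print(f"two cards: {utilization([500, 0], [1000, 1000]):.0%}")
```

With the same $500 balance, the second card doubles the available credit and halves the utilization.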

How does paying with a credit card work?

You use the card to pay for things in one month and then pay it off at the end of the next month (or billing cycle).

So, everything I buy with a credit card in January I don't have to pay for until the end of February. Meanwhile, everything I buy in February I don't have to pay for until the end of March.

Thus, the best case scenario is that if I buy something unaffordable to cover some sort of emergency, I will have 60 days to pay it off. In the worst case scenario, I'll have 30 days.
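The timing above can be sketched with a little date arithmetic. This assumes the simplified model in this section, where the billing cycle is a calendar month and payment is due on the last day of the following month; real cards set their own cycle and due dates, and the function names here are hypothetical.

```python
import calendar
from datetime import date


def payment_due(purchase: date) -> date:
    """Last day of the month after the purchase month (simplified model)."""
    if purchase.month == 12:
        year, month = purchase.year + 1, 1
    else:
        year, month = purchase.year, purchase.month + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, last_day)


def days_to_pay(purchase: date) -> int:
    """Number of days between the purchase and the payment due date."""
    return (payment_due(purchase) - purchase).days


if __name__ == "__main__":
    for d in (date(2023, 1, 1), date(2023, 1, 31)):
        print(d, "->", payment_due(d), f"({days_to_pay(d)} days)")
```

A purchase at the start of the month gets close to two months before payment is due, while one at the end of the month gets about one.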

What if I don't pay off the card?

When your credit card bill is due you can:

- Pay nothing

- Pay less than the minimum amount

- Make the minimum payment

- Pay an intermediate amount

- Pay the entire balance

The first two options are very, very bad and will hurt your credit score. Always pay at least the minimum amount.

If you pay the minimum or an intermediate amount, you will incur interest on the amount you didn't pay (see below).

If you pay the entire balance no interest is incurred and it's like you're getting all the many benefits of the credit card for free (as long as there's not a yearly charge).

How does interest work?

If you don't pay the entire balance due on a credit card, the remaining balance incurs interest. The rate at which this interest is charged is known as the APR (annual percentage rate) and is lower if you have a good credit score and higher if you have a bad credit score.

Credit cards have high interest rates, often around 18%. In contrast, most bank accounts have interest rates (that help you make money) of <1% and the stock market usually offers returns of 6-8%. This means it takes much longer to make money with investments than it takes to lose money by mismanaging a credit card.

As an example, if you can't afford to pay $100 of your credit card bill, then the interest charged for the month would be about $100 × 18/100 × 1/12 = $1.50. This doesn't seem like too much, but the average American has $6,194 of credit card debt, which is about $92.91 in interest per month. If you're consistently unable to make payments on a credit card, the amount of money you owe and, therefore, the interest, will keep increasing.
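The arithmetic in the example above can be reproduced in a few lines. This is a simplified sketch that charges one twelfth of the APR on the unpaid balance each month; actual issuers typically compute interest on a daily balance, so real statements will differ slightly. The function name `monthly_interest` is hypothetical.

```python
def monthly_interest(unpaid_balance: float, apr_pct: float) -> float:
    """Approximate interest for one month: balance x APR / 12."""
    return unpaid_balance * apr_pct / 100 / 12


if __name__ == "__main__":
    # The two balances discussed in the text, at an 18% APR.
    for balance in (100, 6194):
        print(f"${balance:,} unpaid: ${monthly_interest(balance, 18):.2f} interest per month")
```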

So, carrying a balance on a credit card from one month to the next isn't the end of the world (as long as you pay the minimum), but it's also not a great thing to do, especially if the balance is high. Regularly carrying a balance can spiral into significant debt.
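To get a feel for how a carried balance behaves over time, here is a rough month-by-month simulation. It assumes a fixed monthly payment, no new purchases, and interest of APR/12 added to the balance each month; the function name `months_to_pay_off` is hypothetical and the model ignores fees and minimum-payment rules.

```python
from typing import Optional


def months_to_pay_off(balance: float, apr_pct: float, payment: float) -> Optional[int]:
    """Months needed to clear the balance, or None if the payment never catches up."""
    monthly_rate = apr_pct / 100 / 12
    months = 0
    while balance > 0:
        interest = balance * monthly_rate
        if payment <= interest:
            # The payment does not even cover the interest, so the debt never shrinks.
            return None
        balance = balance + interest - payment
        months += 1
    return months


if __name__ == "__main__":
    print(months_to_pay_off(6194, 18, 200))  # steady payments eventually clear the debt
    print(months_to_pay_off(6194, 18, 90))   # payment below the monthly interest
```

When the payment is smaller than the monthly interest, the function returns `None`: the balance only grows, which is the spiral described above.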

How do you apply for a credit card?

Go to a website and give them the info they want. The credit card comes in the mail a week later.

What card to get?

If you're new to credit cards or relatively poor, you are looking for four things:

- The credit card does not charge an annual fee (these are often ~$100).

- The credit card offers perks for things you purchase regularly (e.g., groceries, airplane tickets, Amazon)

- The credit card has a nice app or interface that makes it easy to understand what you've spent and what you owe.

- The credit card can synchronize with money management and budgeting tools like Mint.

The DiscoverIt Student Card is probably a good choice, as is Chase Freedom.

There are also secured credit cards, where you pay the card issuer a deposit up front and can then use the card like a regular credit card up to that amount of money. This is unlike a debit card, where the money you have gets used up by transactions.